Context

AI apps are notoriously "black boxes", making them difficult to debug. As more LLM-based products interact with a variety of APIs, tools, and users in multi-step workflows, developers need better ways to evaluate, monitor, and debug these systems.

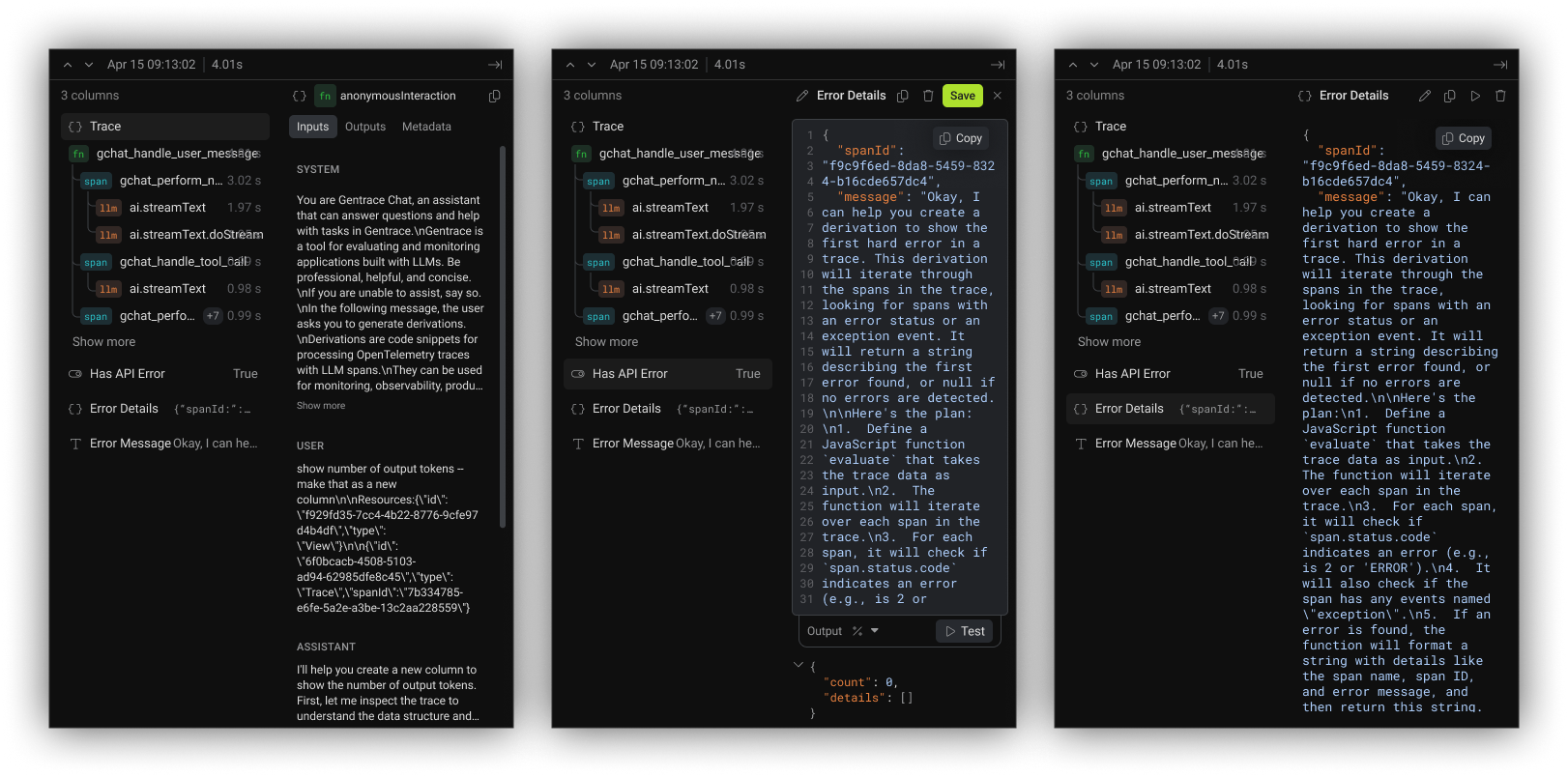

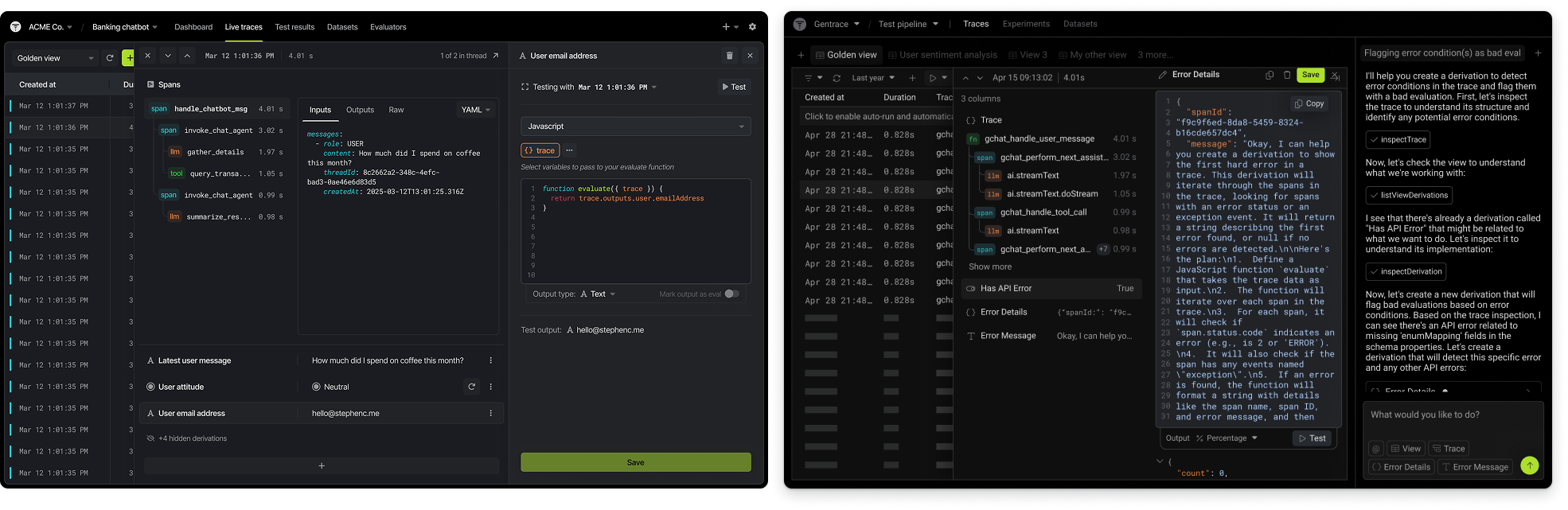

Gentrace is an AI observability platform that instruments LLM apps using the OpenTelemetry standard, recording traces for every AI interaction. Built as an AI-native tool, Gentrace lets developers query and chat with their traces to create new columns, custom views, filters, and alerts, turning opaque workflows into actionable insights for performance monitoring and error analysis.

Impact

As the sole designer at the startup, I shipped a variety of features around two main goals:

- Shifting the product from its initial focus on evals to trace logging

- Revamping the visual language to accommodate the increased complexity of the new version

Gentrace refocused its product on agentic AI startups by shifting from evals alone, which are mostly static tests that benchmark model performance, to active trace logging of live interactions. The new features I built were all AI-native and required a deep technical understanding of how AI interactions work:

- Collaborating with an LLM (via chat) to create helpful custom values

- Applying filters and views to support workflows

- Visualizing performance from OTEL trace data by introducing the first charts into the app

- Building alerts with email and Slack integration

Each of these features introduced new panels and content sections that had to fit into an increasingly cramped design system. To accommodate this increased data density, I designed and shipped (in code) an extensive visual revamp that clarified the information architecture and gave the product a more modern look.